This is part 2 of a series about building container images for self-hosted GitHub runners and deploying them to Azure Container Apps. If you haven’t checked out part 1, do that first.

This post builds on the previous setup, focusing on auto-scaling the Azure Container App and virtual network integration for the Azure Container Apps environment.

Why scale container apps

Having container app replicas ready and warm makes workflow jobs start quickly since there isn’t any need for instances to initialize. But this privilege comes with a cost…

It’s possible to reduce cost by changing the scaling rule settings to reduce the number of available runners during off-hours. But it would be better to scale to zero and only initialize runners when a workflow job requests one.

Use jobs in Azure Container Apps in combination with KEDA to initialize runners based on events. KEDA has the GitHub Runner Scaler for scaling based on the number of queued jobs in GitHub Actions.
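As a rough illustration of the scale-to-zero idea, the event trigger part of a job could look something like the sketch below. The property names follow the `Microsoft.App/jobs` resource schema and the KEDA GitHub Runner Scaler metadata. The parameter names, the secret name and all numeric values are assumptions made for this sketch, not values taken from my template.

```bicep
// Sketch only: parameter names, the secret name and all numbers are hypothetical.
param gitHubRepoOwner string
param gitHubRepoName string

// Name of a job secret holding the GitHub PAT (assumed to be defined on the job).
var gitHubPatSecretName = 'github-pat'

// Shape of configuration.eventTriggerConfig on a Microsoft.App/jobs resource.
var eventTriggerConfig = {
  parallelism: 1 // replicas started per job execution
  replicaCompletionCount: 1 // successful replicas needed to complete an execution
  scale: {
    minExecutions: 0 // nothing runs while the queue is empty
    maxExecutions: 10 // upper bound on executions created per polling interval
    pollingInterval: 30 // seconds between checks of the GitHub queue
    rules: [
      {
        name: 'github-runner-scaling-rule'
        type: 'github-runner'
        auth: [
          {
            triggerParameter: 'personalAccessToken'
            secretRef: gitHubPatSecretName
          }
        ]
        metadata: {
          githubApiURL: 'https://api.github.com'
          runnerScope: 'repo'
          owner: gitHubRepoOwner
          repos: gitHubRepoName
          labels: 'self-hosted'
          targetWorkflowQueueLength: '1' // queued jobs per execution
        }
      }
    ]
  }
}

output eventTriggerConfig object = eventTriggerConfig
```

With `minExecutions` set to `0`, no runner exists until a job is queued, so the first job pays the container start-up time in exchange for not paying for idle replicas.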

Why virtual network integration

Azure Container Apps runs inside a managed container environment. The default vnet for this environment is automatically generated and managed by Azure. This means the vnet is inaccessible to the customer, and it can’t communicate privately with other customer vnets, such as a hub.

Benefits of using your own virtual network:

- able to peer with other vnets

- create subnets with service endpoints

- control the egress with user defined routes

- have the containers communicate with private endpoints
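To make two of these benefits a bit more concrete, here is a minimal sketch of peering the runner vnet with a hub and sending egress through a firewall with a user defined route. It is not part of my template. Every name, IP address and parameter in it is hypothetical, and it assumes the runner vnet already exists in the resource group being deployed to. Note that, per the feature comparison later in this post, user defined routes are only supported in workload profiles environments.

```bicep
// Sketch only: all names, addresses and parameters below are hypothetical.
param location string = resourceGroup().location
param spokeVnetName string = 'vnet-aca-runners'
param hubVnetResourceId string
param hubFirewallPrivateIp string = '10.0.0.4'

// The vnet that hosts the container apps environment (assumed to exist already).
resource spokeVnet 'Microsoft.Network/virtualNetworks@2023-11-01' existing = {
  name: spokeVnetName
}

// Peering from the runner vnet to the hub.
resource spokeToHub 'Microsoft.Network/virtualNetworks/virtualNetworkPeerings@2023-11-01' = {
  parent: spokeVnet
  name: 'spoke-to-hub'
  properties: {
    remoteVirtualNetwork: { id: hubVnetResourceId }
    allowVirtualNetworkAccess: true
    allowForwardedTraffic: true
  }
}

// Route table that sends all outbound traffic to a firewall in the hub.
resource egressRouteTable 'Microsoft.Network/routeTables@2023-11-01' = {
  name: 'rt-aca-egress'
  location: location
  properties: {
    routes: [
      {
        name: 'default-via-hub-firewall'
        properties: {
          addressPrefix: '0.0.0.0/0'
          nextHopType: 'VirtualAppliance'
          nextHopIpAddress: hubFirewallPrivateIp
        }
      }
    ]
  }
}

output routeTableResourceId string = egressRouteTable.id
```

Two things are left out of the sketch: the hub needs a matching peering back to the runner vnet, and the route table only takes effect once it is associated with the infrastructure subnet (the subnet's `routeTable.id` property).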

Setup

Once a managed container environment is created it is not possible to change the network.

Build the infrastructure (Bicep)

Use the bicep template from the previous post as a base and make these changes:

- replace the `Azure Container App` with `Azure Container App Job`

- add a vnet with a subnet

- update the `managed-environment` with the property `infrastructureSubnetId`

  - set the subnet resource ID as the value

- optionally add a storage account with a container for testing purposes

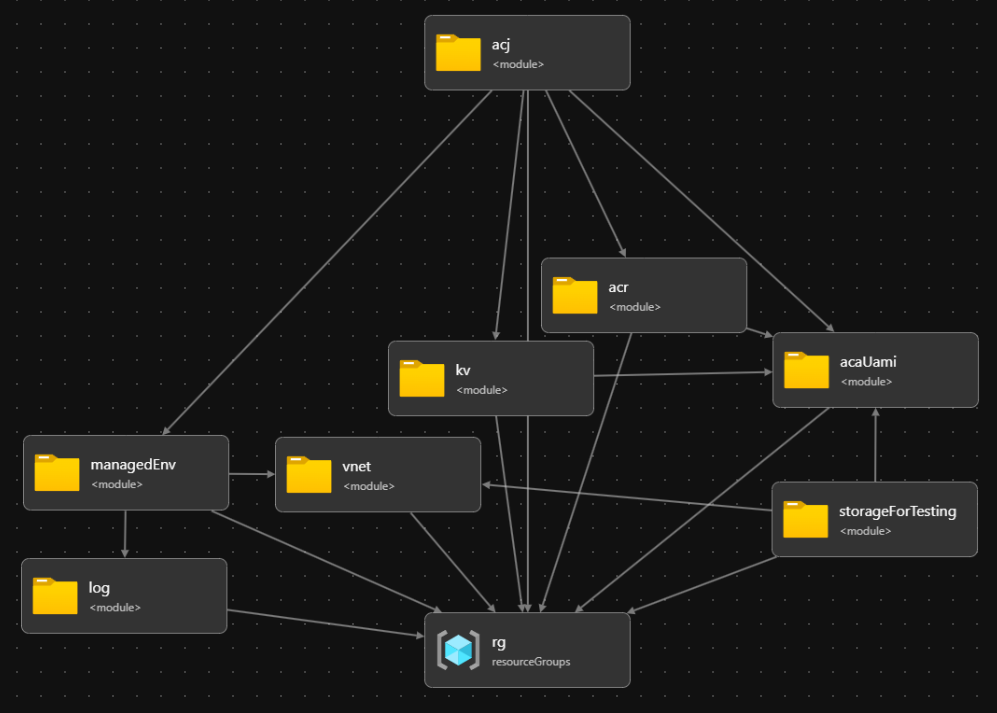

The bicep template visualized looks like this:

Here is a more detailed look at how the vnet, container app environment, container app job and storage account are configured in the bicep template:

```bicep
targetScope = 'subscription'

resource rg 'Microsoft.Resources/resourceGroups@2024-03-01' = {...}
module acaUami 'br/public:avm/res/managed-identity/user-assigned-identity:0.2.2' = {...}
module acr 'br/public:avm/res/container-registry/registry:0.3.1' = {...}
module kv 'br/public:avm/res/key-vault/vault:0.6.2' = {...}
module log 'br/public:avm/res/operational-insights/workspace:0.4.0' = {...}

module vnet 'br/public:avm/res/network/virtual-network:0.1.8' = {
  name: '${uniqueString(deployment().name, parLocation)}-vnet'
  scope: rg
  params: {
    name: parManagedEnvironmentVnetName
    addressPrefixes: ['10.20.0.0/16']
    subnets: [
      {
        name: parManagedEnvironmentInfraSubnetName
        addressPrefix: '10.20.0.0/23'
        // Workload profiles environments require this delegation
        delegations: [
          {
            name: 'Microsoft.App.environments'
            properties: { serviceName: 'Microsoft.App/environments' }
          }
        ]
        // Lets the storage account used for testing allow traffic from this subnet
        serviceEndpoints: [
          { service: 'Microsoft.Storage' }
        ]
      }
    ]
  }
}

module managedEnv 'br/public:avm/res/app/managed-environment:0.5.2' = {
  scope: rg
  name: '${uniqueString(deployment().name, parLocation)}-managed-environment'
  params: {
    name: parManagedEnvironmentName
    logAnalyticsWorkspaceResourceId: log.outputs.resourceId
    infrastructureResourceGroupName: parManagedEnvironmentInfraResourceGroupName
    // The vnet has a single subnet, so the first ID is the infrastructure subnet
    infrastructureSubnetId: first(vnet.outputs.subnetResourceIds)
    internal: true
    zoneRedundant: false
    // A non-empty array makes this a workload profiles environment
    workloadProfiles: [
      {
        name: 'Consumption'
        workloadProfileType: 'Consumption'
      }
    ]
  }
}

module acj 'br/public:avm/res/app/job:0.3.0' = {
  scope: rg
  name: '${uniqueString(deployment().name, parLocation)}-acj'
  params: {
    name: parAcjName
    environmentResourceId: managedEnv.outputs.resourceId
    containers: [
      ...
    ]
    secrets: [
      ...
    ]
    registries: [
      ...
    ]
    // Executions are started by the KEDA scale rule below
    triggerType: 'Event'
    eventTriggerConfig: {
      scale: {
        rules: [
          {
            name: 'github-runner-scaling-rule'
            type: 'github-runner'
            auth: [
              {
                triggerParameter: 'personalAccessToken'
                secretRef: varSecretNameGitHubAccessToken
              }
            ]
            metadata: {
              githubApiURL: 'https://api.github.com'
              runnerScope: 'repo'
              owner: parGitHubRepoOwner
              repos: parGitHubRepoName
              labels: 'self-hosted'
            }
          }
        ]
      }
    }
    managedIdentities: {
      userAssignedResourceIds: [acaUami.outputs.resourceId]
    }
  }
}

// Optional storage account, only deployed when the test parameter is set
module storageForTesting 'br/public:avm/res/storage/storage-account:0.9.1' = if (parTestVnetServiceEndpoint != null) {
  scope: rg
  name: '${uniqueString(deployment().name, parLocation)}-storage-account'
  params: {
    name: parTestVnetServiceEndpoint.?storageAccountName!
    skuName: 'Standard_LRS'
    blobServices: {
      containers: [
        { name: parTestVnetServiceEndpoint.?containerName }
      ]
    }
    roleAssignments: [
      {
        principalId: acaUami.outputs.principalId
        roleDefinitionIdOrName: 'Storage Blob Data Owner'
      }
    ]
    // Deny everything except traffic from the infrastructure subnet
    networkAcls: {
      defaultAction: 'Deny'
      virtualNetworkRules: [
        {
          action: 'Allow'
          id: first(vnet.outputs.subnetResourceIds)
        }
      ]
    }
  }
}
```

Deploy the bicep template to a subscription with azure-cli or pwsh in your own terminal, or create a workflow to handle it.

```powershell
# $azRegion and $azSubscriptionName are assumed to be set earlier in the session
$deploySplat = @{
    Name                  = "self-hosted-runners-acj-{0}" -f (Get-Date).ToString("yyyyMMdd-HH-mm-ss")
    Location              = $azRegion
    TemplateFile          = 'src/bicep/main.bicep'
    TemplateParameterFile = 'main.bicepparam'
    Verbose               = $true
}
Select-AzSubscription -Subscription $azSubscriptionName
New-AzSubscriptionDeployment @deploySplat
```
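The deployment reads its values from `main.bicepparam`. As a rough idea of what such a file might contain, here is a sketch that uses the parameter names visible in the template above. Every value is a made-up placeholder, the parameter types are assumed to be strings (plus one object), and parameters used by the elided modules are not listed. The `using` path assumes the parameter file sits in the repository root, as the deployment command suggests.

```bicep
// main.bicepparam (sketch): every value below is a hypothetical placeholder.
using 'src/bicep/main.bicep'

param parLocation = 'norwayeast'

// Network and environment
param parManagedEnvironmentVnetName = 'vnet-aca-runners'
param parManagedEnvironmentInfraSubnetName = 'snet-aca-infra'
param parManagedEnvironmentName = 'cae-runners'
param parManagedEnvironmentInfraResourceGroupName = 'rg-aca-runners-infra'

// Container app job and the repository it serves
param parAcjName = 'acj-github-runner'
param parGitHubRepoOwner = 'my-github-user'
param parGitHubRepoName = 'my-repo'

// Optional: remove this parameter to skip the test storage account
// (assumes the template declares it as nullable)
param parTestVnetServiceEndpoint = {
  storageAccountName: 'stacarunnertest001'
  containerName: 'testdata'
}
```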

Results

Test vnet access

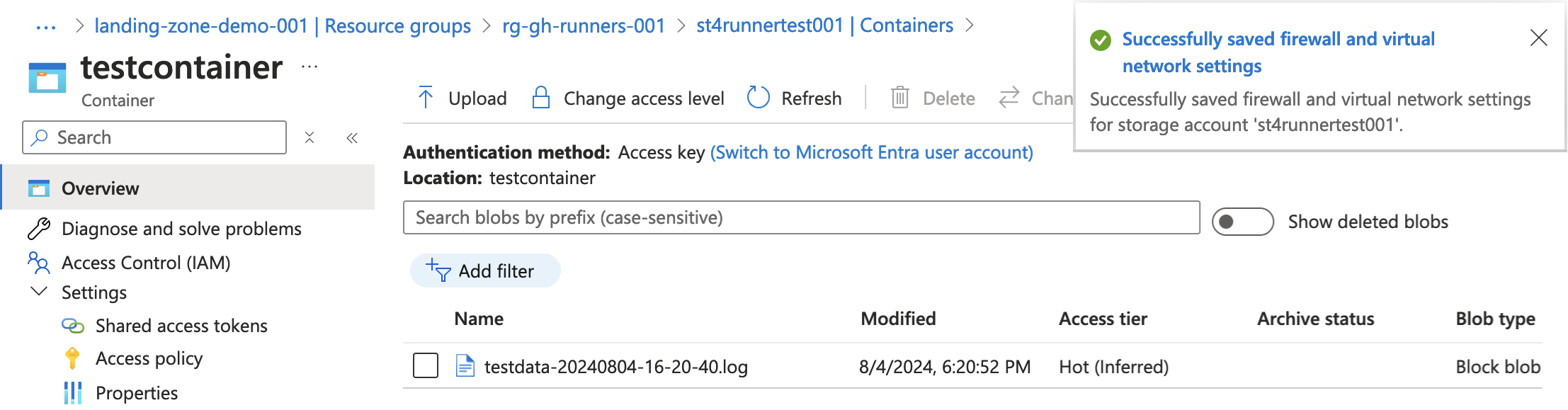

Create a workflow to test the vnet access from inside the runner. Here is a workflow that will attempt to upload a file to a storage account. The storage account is configured to allow traffic only from the container app environment infrastructure subnet. The subnet is configured with a service endpoint for Azure Storage.

```yaml
name: Put file in private storage
on:
  workflow_dispatch:
env:
  PRIVATE_STORAGE_ACCOUNT_NAME: ${{ vars.PRIVATE_STORAGE_ACCOUNT_NAME }}
  PRIVATE_STORAGE_ACCOUNT_CONTAINER_NAME: ${{ vars.PRIVATE_STORAGE_ACCOUNT_CONTAINER_NAME }}

jobs:
  put-file-in-private-storage:
    runs-on: [self-hosted]
    steps:
      - name: Install pwsh modules
        shell: pwsh
        run: |
          Install-Module -Name Az.Accounts -RequiredVersion 3.0.2 -Repository PSGallery -Force
          Install-Module -Name Az.Storage -RequiredVersion 6.1.3 -Repository PSGallery -Force
      - name: Azure Login
        shell: pwsh
        run: |
          Connect-AzAccount -Identity -AccountId $env:MSI_CLIENT_ID
      - name: Put blob in container
        shell: pwsh
        run: |
          $fileName = "testdata-{0}.log" -f (Get-Date).ToString("yyyyMMdd-HH-mm-ss")
          Set-Content -Path $fileName -Value "example content"
          $stContext = New-AzStorageContext -StorageAccountName $env:PRIVATE_STORAGE_ACCOUNT_NAME
          $splat = @{
            File      = $fileName
            Container = $env:PRIVATE_STORAGE_ACCOUNT_CONTAINER_NAME
            Context   = $stContext
          }
          Set-AzStorageBlobContent @splat
```

After the workflow finished successfully, and after I added my public IP to the storage account firewall, it’s possible to see the new file:

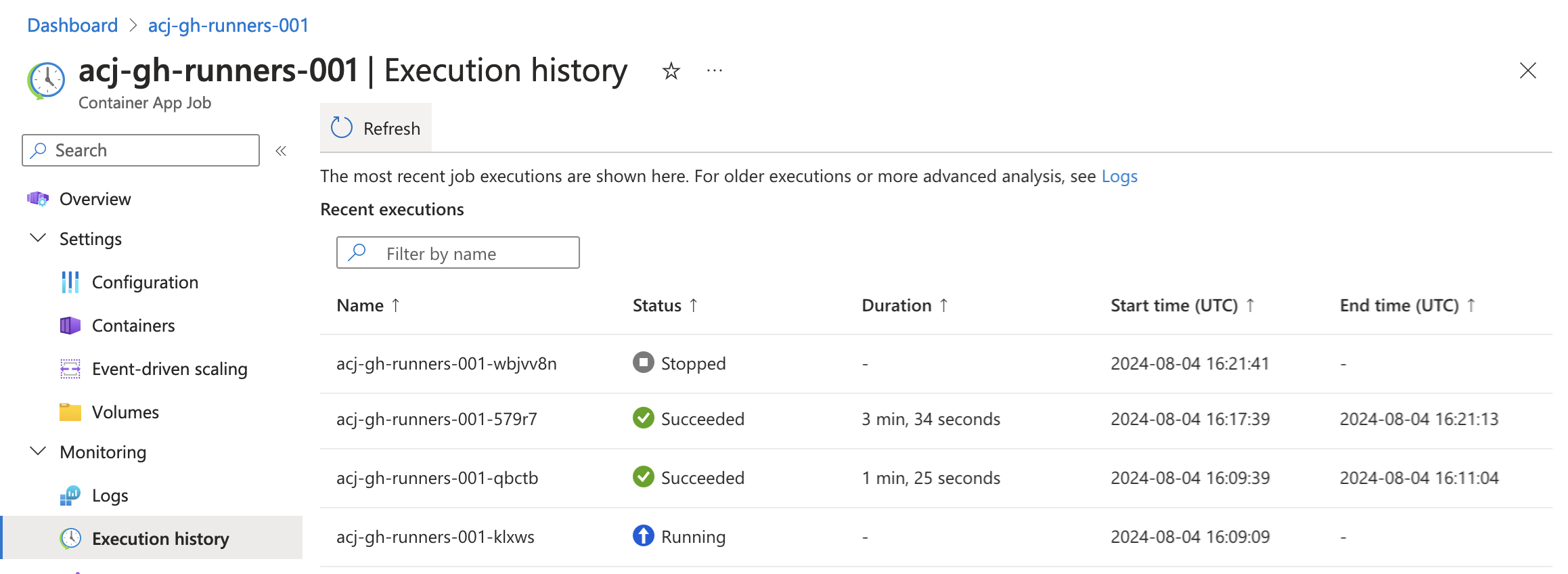

Running a few more workflows and returning to the Azure Portal shows the execution history:

Gotchas

Most of the gotchas I encountered had to do with the managed-environment types and how they differ from each other, mostly around the networking.

Environment types

Azure Container Apps environments come in two different types:

- Consumption only

- Workload profiles

Some of the noteworthy feature differences:

| Feature | Consumption only | Workload profiles |

|---|---|---|

| supports user defined routes | ❌ | ✅ |

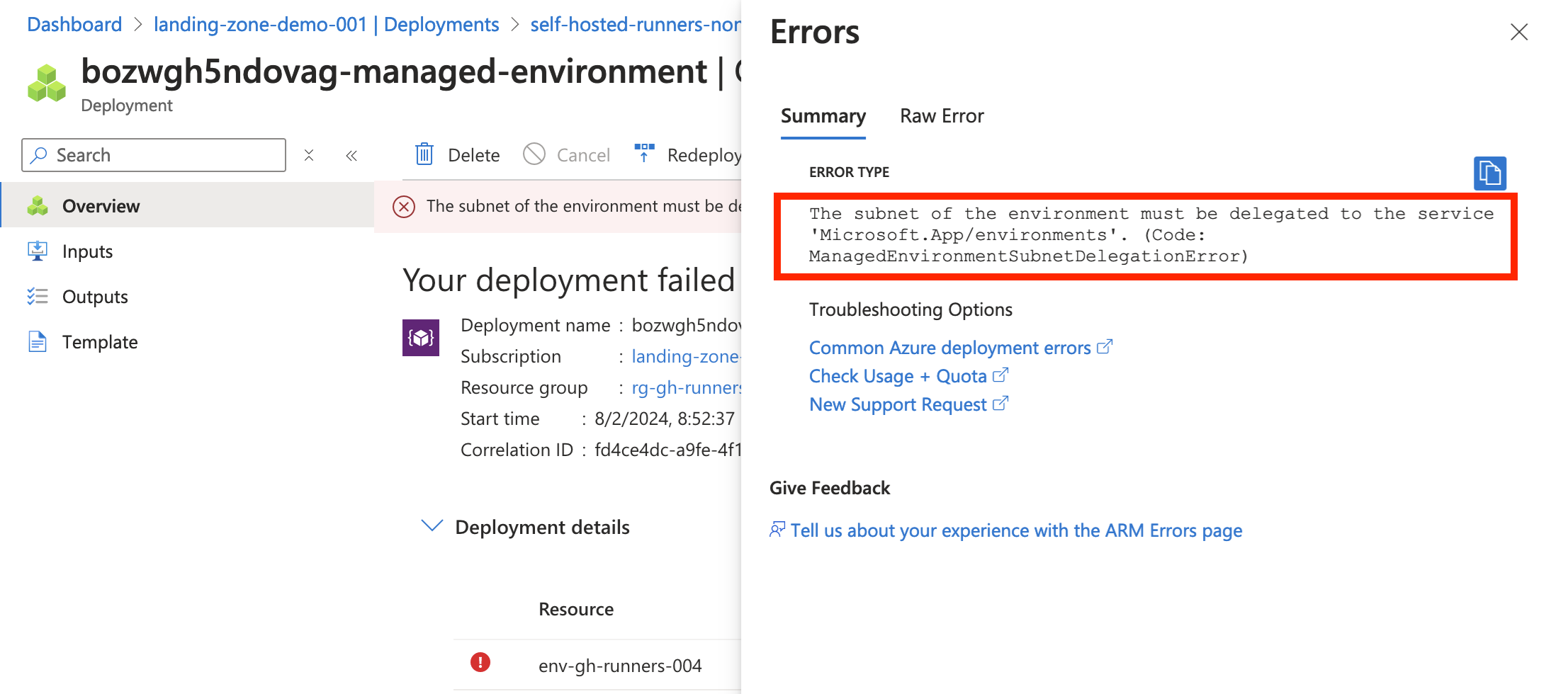

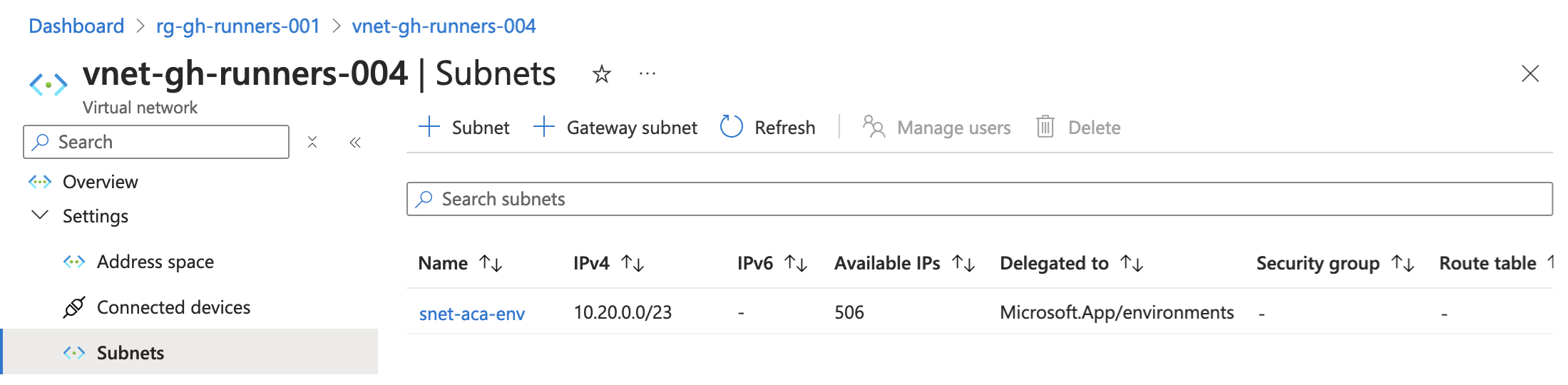

requires subnet to be delegated to Microsoft.App/environments |

❌ | ✅ |

| allows customization of the infrastructureResourceGroupName | ❌ | ✅ |

To build a managed-environment using the AVM module:

- Consumption only → set

workloadProfilesas an empty array - Workload profile → set

workloadProfilesas an array of profile type objects

1module managedEnv 'br/public:avm/res/app/managed-environment:0.5.2' = {

2 ...

3 params: {

4 ...

5 workloadProfiles: [

6 {

7 name: 'Consumption'

8 workloadProfileType: 'Consumption'

9 }

10 ]

11 }

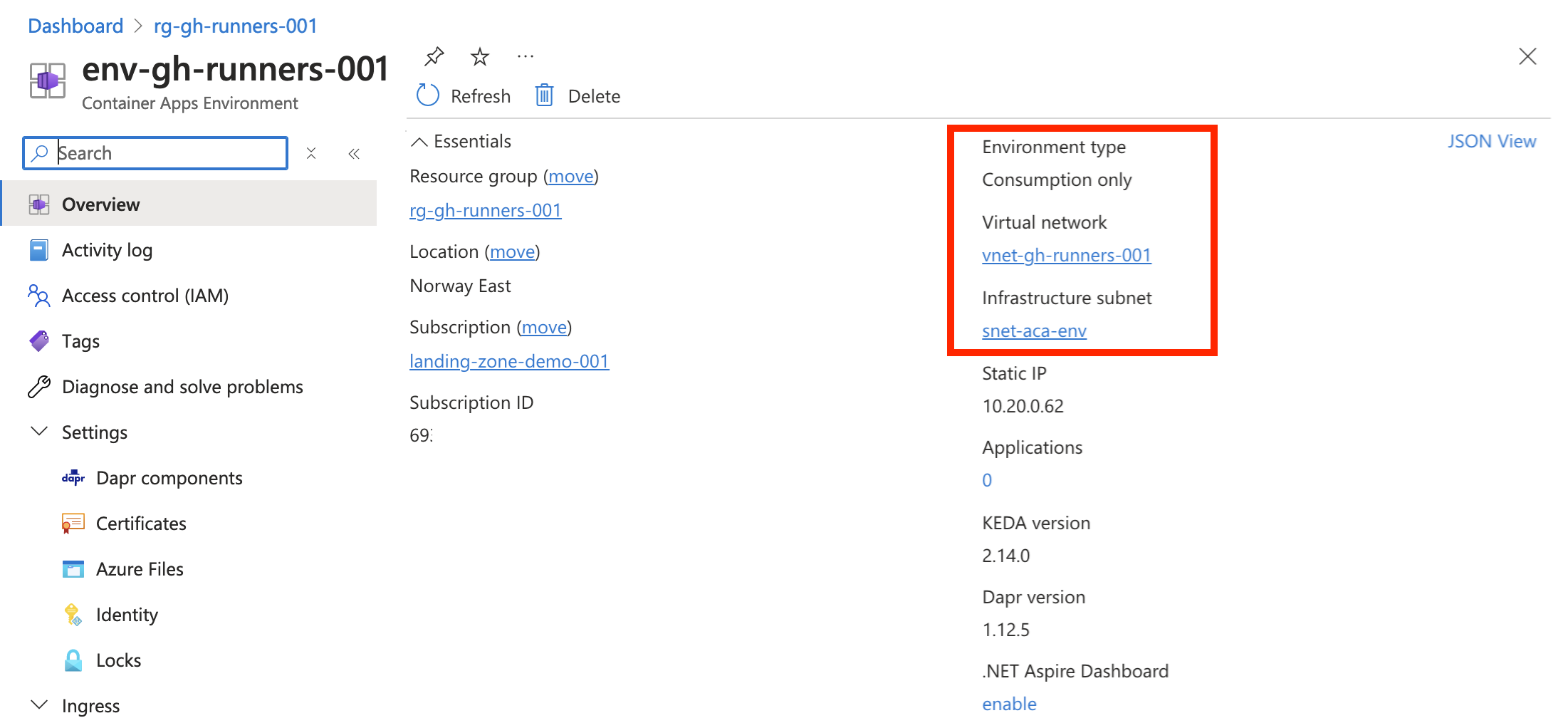

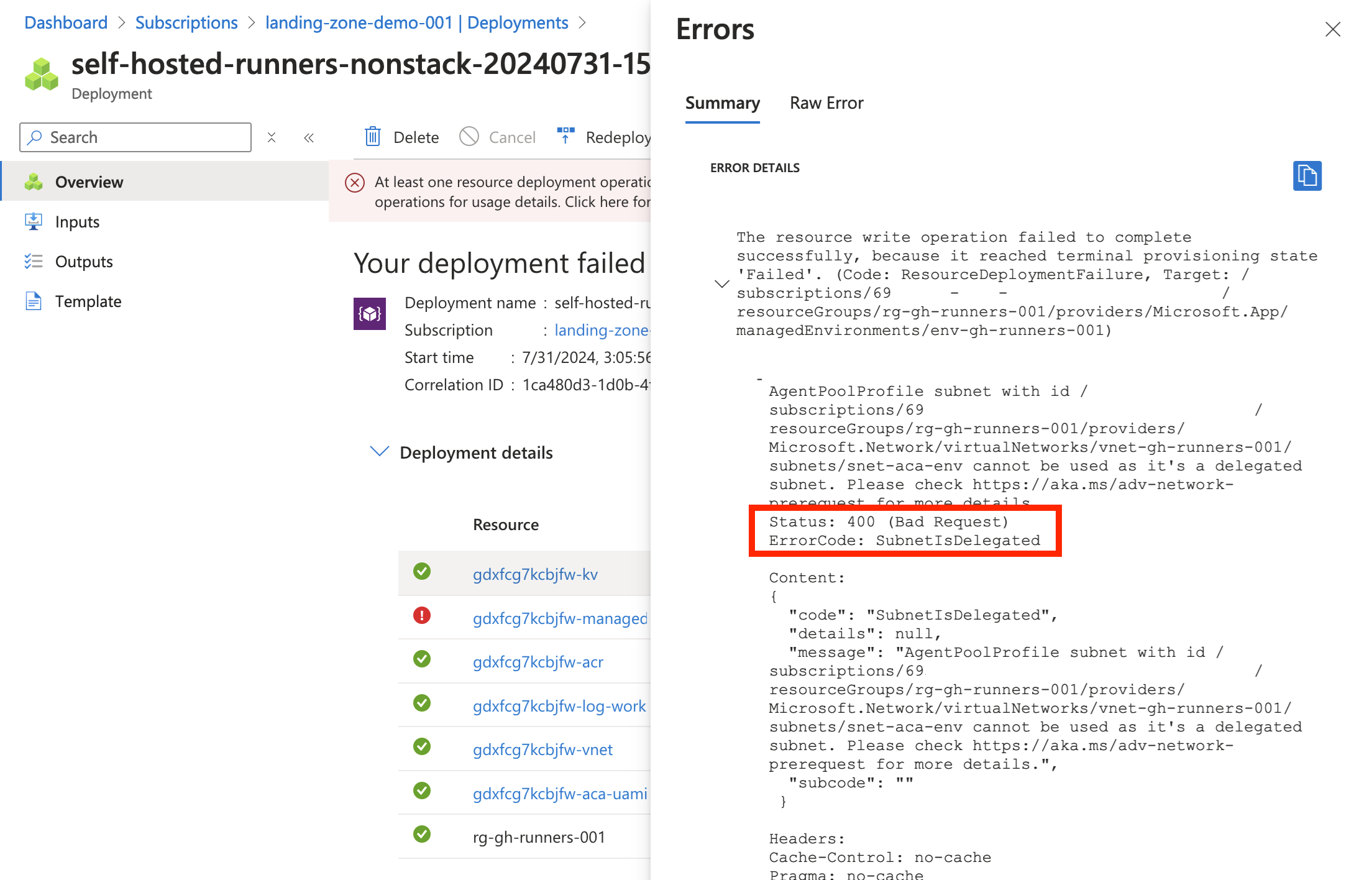

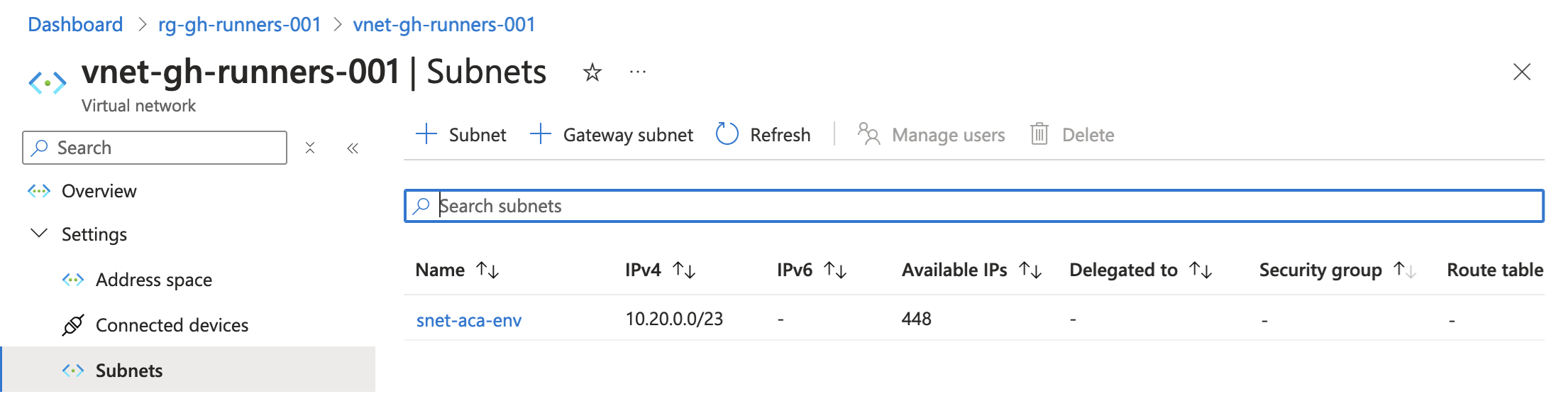

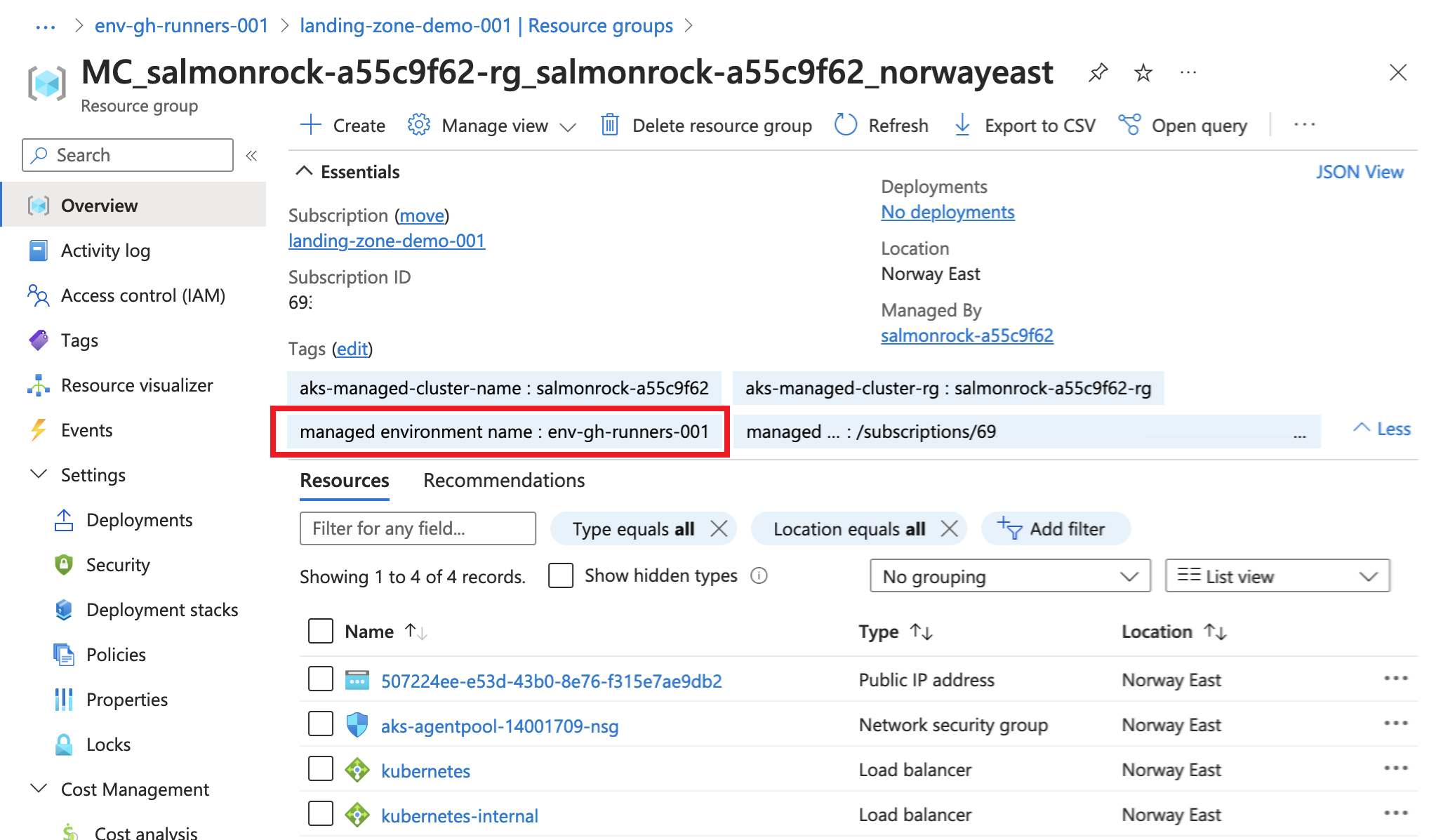

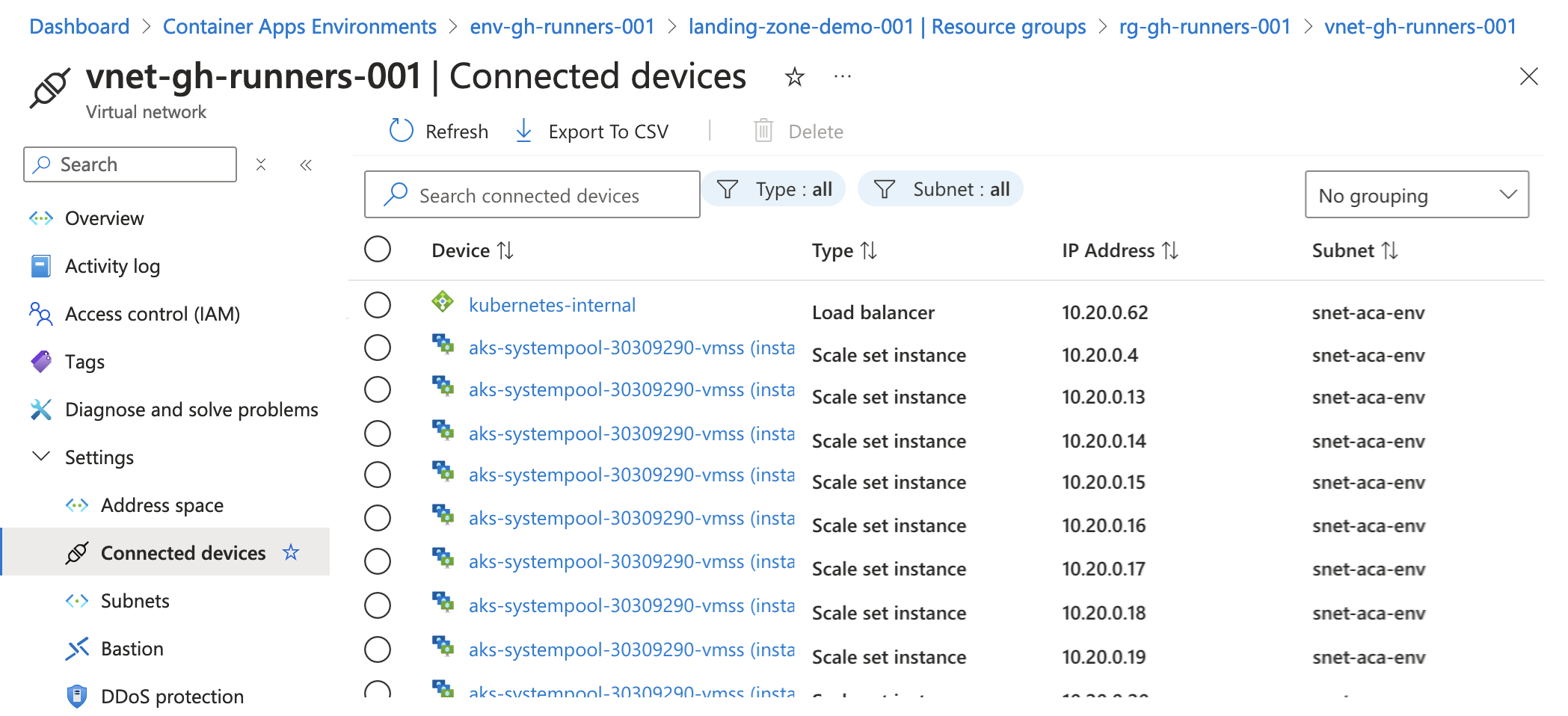

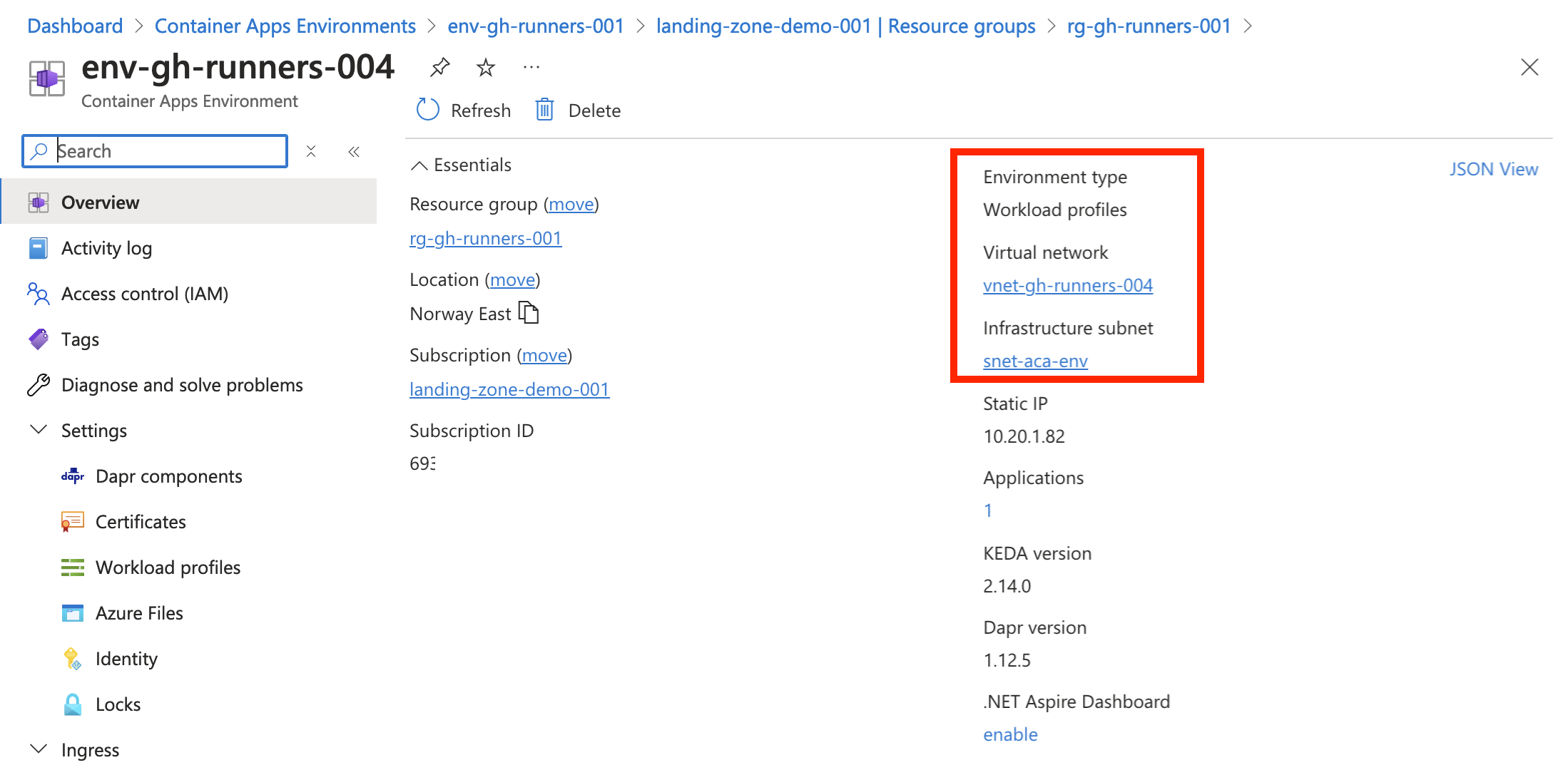

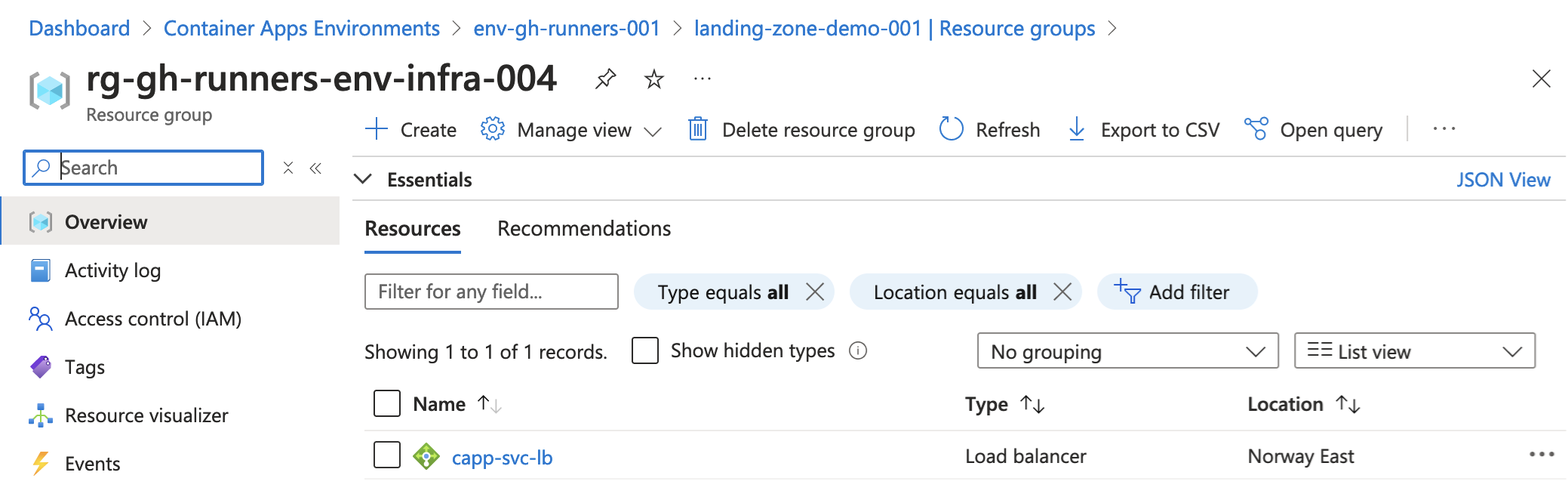

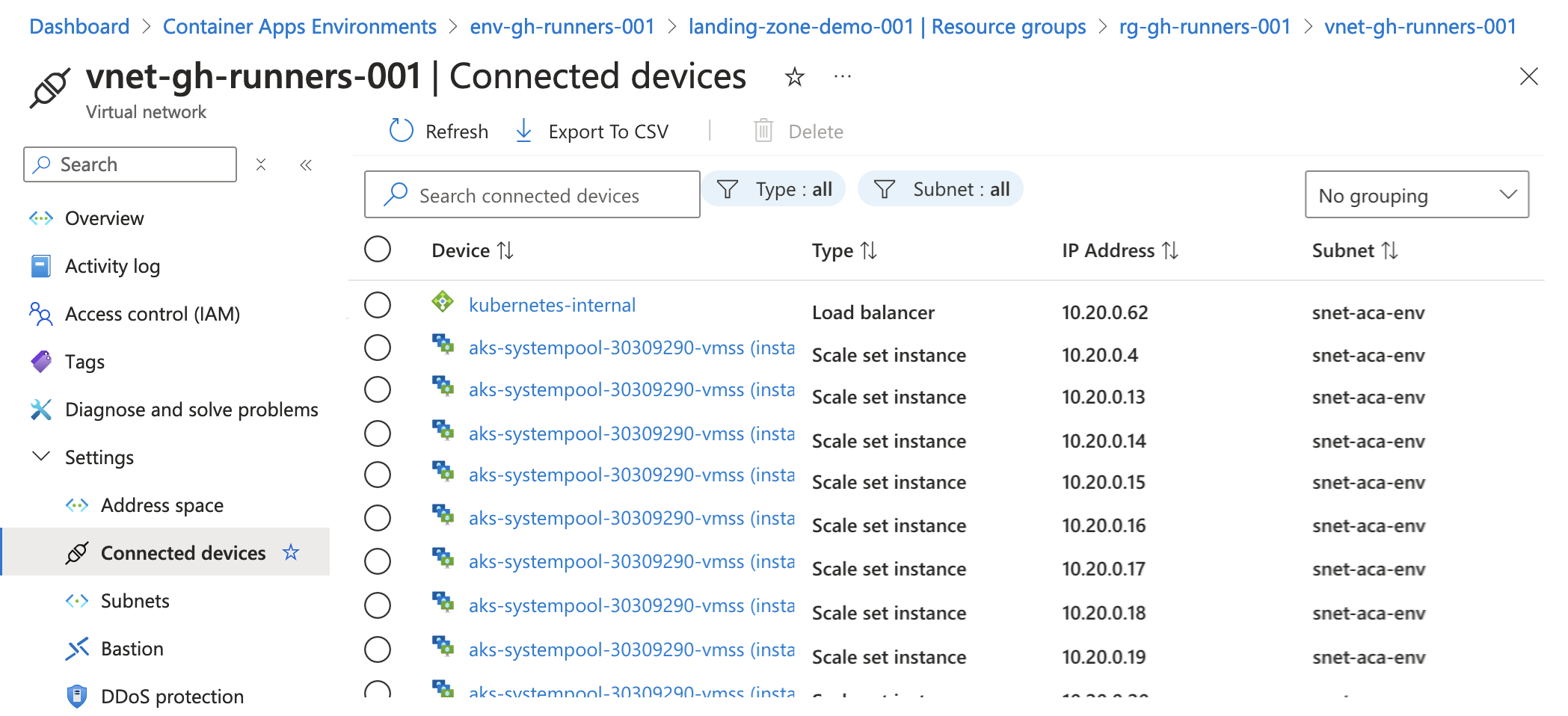

12}Here comes a series of images showcasing some of the differences with vnet integration in the two different environments:

- container environments

- delegation error

- subnet

- resource group with managed infrastructure

- vnet connected devices

Consumption only environment

Workload profiles environment
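For comparison with the workload profiles example above, a Consumption only environment could be sketched like this. It follows the differences listed in the feature table: `workloadProfiles` is an empty array, `infrastructureResourceGroupName` is left out, and the subnet is assumed to have no delegation. The parameter names are hypothetical, and I have only shown the module parameters already used earlier in this post, so treat it as a starting point rather than a tested template.

```bicep
// Sketch only: parameter names are hypothetical.
param environmentName string
param logAnalyticsWorkspaceResourceId string

// Resource ID of a subnet that is NOT delegated to Microsoft.App/environments
param infraSubnetResourceId string

module managedEnvConsumptionOnly 'br/public:avm/res/app/managed-environment:0.5.2' = {
  name: '${uniqueString(deployment().name)}-managed-environment'
  params: {
    name: environmentName
    logAnalyticsWorkspaceResourceId: logAnalyticsWorkspaceResourceId
    infrastructureSubnetId: infraSubnetResourceId
    internal: true
    zoneRedundant: false
    // An empty array selects the Consumption only environment type
    workloadProfiles: []
  }
}
```

Since the network of an environment can't be changed after creation, switching between the two types means deploying a new environment.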

Closing words

All files for this post can be found in this repository.

This will be the last post in this series for now. As for replacing the PAT with a GitHub App, myoung34’s excellent containerized runner already solves this. If I were to revisit this later, I’d try doing it with PowerShell instead of bash, as I’m heavily dependent on PowerShell in my runners.